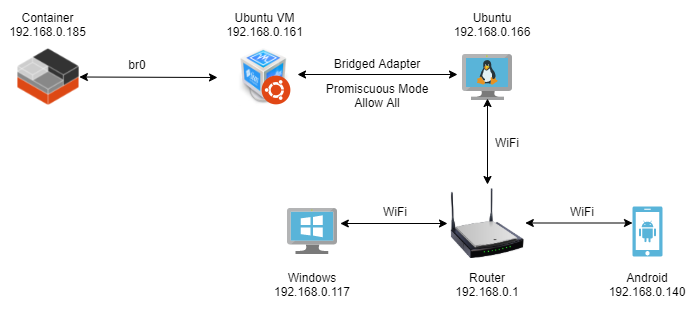

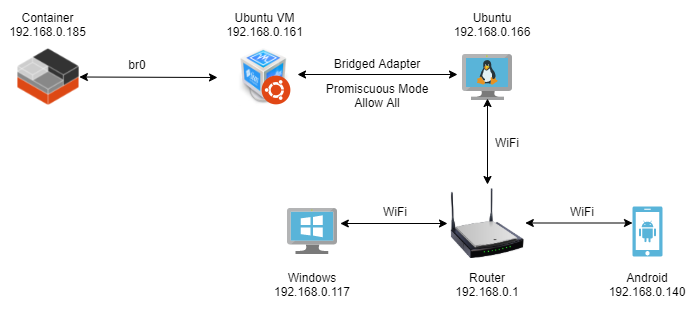

First, here is the network configuration I am trying to achieve:

- LXC container should be exposed to my local network

- LXC container should have internet access

Here's what I have been able to achieve so far:

I followed "Converting eth0 to br0 and getting all your LXC or LXD onto your LAN | Ubuntu" to meet requirement #1 above. However, the LXC container has no internet connection.

Some other observations:

- I can ping to and from every device on the network except the LXC container.

- The LXC container and its host (the Ubuntu VM) can ping each other.

I have read several related topics on this forum, but to no avail. Any ideas on how to enable internet access inside the container?

On the host (Ubuntu VM):

```
ajinkya@metaverse:~$ lsb_release -a
No LSB modules are available.
Distributor ID: Ubuntu
Description:    Ubuntu 20.04.2 LTS
Release:        20.04
Codename:       focal
ajinkya@metaverse:~$ lxc-ls --version
4.0.6
ajinkya@metaverse:~$ uname -a
Linux metaverse 5.11.0-25-generic #27~20.04.1-Ubuntu SMP Tue Jul 13 17:41:23 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux
```

---------------------------------------------

```
ajinkya@metaverse:~$ ip a show
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: enp0s3: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel master br0 state UP group default qlen 1000
    link/ether 08:00:27:db:a6:da brd ff:ff:ff:ff:ff:ff
3: br0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 08:00:27:db:a6:da brd ff:ff:ff:ff:ff:ff
    inet 192.168.0.161/24 brd 192.168.0.255 scope global dynamic br0
       valid_lft 85283sec preferred_lft 85283sec
    inet6 fd01::124:c625:211:6384/64 scope global temporary dynamic
       valid_lft 251sec preferred_lft 251sec
    inet6 fd01::a00:27ff:fedb:a6da/64 scope global dynamic mngtmpaddr
       valid_lft 251sec preferred_lft 251sec
    inet6 fe80::a00:27ff:fedb:a6da/64 scope link
       valid_lft forever preferred_lft forever
4: veth1000_JY5u@if2: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue master br0 state UP group default qlen 1000
    link/ether fe:03:d9:b4:05:29 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::fc03:d9ff:feb4:529/64 scope link
       valid_lft forever preferred_lft forever
```

---------------------------------------------

```
ajinkya@metaverse:~$ brctl show
bridge name     bridge id               STP enabled     interfaces
br0             8000.080027dba6da       no              enp0s3
                                                        veth1000_JY5u
```

---------------------------------------------

```
ajinkya@metaverse:~$ cat /etc/default/lxc-net
# This file is auto-generated by lxc.postinst if it does not
# exist. Customizations will not be overridden.
# Leave USE_LXC_BRIDGE as "true" if you want to use lxcbr0 for your
# containers. Set to "false" if you'll use virbr0 or another existing
# bridge, or mavlan to your host's NIC.
USE_LXC_BRIDGE="false"

# If you change the LXC_BRIDGE to something other than lxcbr0, then
# you will also need to update your /etc/lxc/default.conf as well as the
# configuration (/var/lib/lxc/<container>/config) for any containers
# already created using the default config to reflect the new bridge
# name.
# If you have the dnsmasq daemon installed, you'll also have to update
# /etc/dnsmasq.d/lxc and restart the system wide dnsmasq daemon.
LXC_BRIDGE="lxcbr0"
LXC_ADDR="10.0.3.1"
LXC_NETMASK="255.255.255.0"
LXC_NETWORK="10.0.3.0/24"
LXC_DHCP_RANGE="10.0.3.2,10.0.3.254"
LXC_DHCP_MAX="253"

# Uncomment the next line if you'd like to use a conf-file for the lxcbr0
# dnsmasq. For instance, you can use 'dhcp-host=mail1,10.0.3.100' to have
# container 'mail1' always get ip address 10.0.3.100.
#LXC_DHCP_CONFILE=/etc/lxc/dnsmasq.conf

# Uncomment the next line if you want lxcbr0's dnsmasq to resolve the .lxc
# domain. You can then add "server=/lxc/10.0.3.1' (or your actual $LXC_ADDR)
# to your system dnsmasq configuration file (normally /etc/dnsmasq.conf,
# or /etc/NetworkManager/dnsmasq.d/lxc.conf on systems that use NetworkManager).
# Once these changes are made, restart the lxc-net and network-manager services.
# 'container1.lxc' will then resolve on your host.
#LXC_DOMAIN="lxc"
```

---------------------------------------------

```
ajinkya@metaverse:~$ cat /etc/lxc/default.conf
lxc.net.0.type = veth
lxc.net.0.link = br0
lxc.net.0.flags = up
lxc.net.0.hwaddr = 00:16:3e:xx:xx:xx
```
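The default config above attaches the container's veth to br0 but sets no IPv4 address or gateway, so addressing is left to whatever runs inside the container. One thing worth checking is whether con1 actually gets a default route to the LAN router. A minimal sketch, assuming the router is 192.168.0.1 (the post does not say what the gateway address is) and that con1 lives under the default unprivileged path `~/.local/share/lxc/con1`: the hypothetical helper `lxc_static_net` prints the LXC 4.0 keys `lxc.net.0.ipv4.address` and `lxc.net.0.ipv4.gateway`, meant for that container's own config rather than the shared `default.conf`.

```shell
#!/bin/sh
# Hypothetical helper (not part of LXC): print LXC 4.0 network keys that
# give interface 0 a static IPv4 address ($1, in CIDR form) and a default
# gateway ($2). The gateway value is an assumption the caller must supply.
lxc_static_net() {
  if [ "$#" -ne 2 ]; then
    echo "usage: lxc_static_net ADDRESS/PREFIX GATEWAY" >&2
    return 1
  fi
  printf 'lxc.net.0.ipv4.address = %s\n' "$1"
  printf 'lxc.net.0.ipv4.gateway = %s\n' "$2"
}
```

Usage: `lxc_static_net 192.168.0.185/24 192.168.0.1 >> ~/.local/share/lxc/con1/config`, then `lxc-stop -n con1` and `lxc-start -n con1`. If the container's own `/etc/network/interfaces` also configures eth0, the two settings may conflict.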

---------------------------------------------

```
ajinkya@metaverse:~$ cat .config/lxc/default.conf
lxc.include = /etc/lxc/default.conf
lxc.idmap = u 0 100000 65536
lxc.idmap = g 0 100000 65536
```

---------------------------------------------

```
ajinkya@metaverse:~$ lxc-ls --fancy
NAME STATE   AUTOSTART GROUPS IPV4          IPV6                     UNPRIVILEGED
con1 RUNNING 0         -      192.168.0.185 fd01::216:3eff:fe67:d14e true
```

---------------------------------------------

On the container (con1):

```
root@con1:/# lsb_release -a
No LSB modules are available.
Distributor ID: Ubuntu
Description:    Ubuntu 16.04.7 LTS
Release:        16.04
Codename:       xenial
root@con1:/# uname -a
Linux con1 5.11.0-25-generic #27~20.04.1-Ubuntu SMP Tue Jul 13 17:41:23 UTC 2021 x86_64 x86_64 x86_64 GNU/Linux
```

---------------------------------------------

```
root@con1:/# ip a show
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: eth0@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP group default qlen 1000
    link/ether 00:16:3e:67:d1:4e brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet 192.168.0.185/24 brd 192.168.0.255 scope global eth0
       valid_lft forever preferred_lft forever
    inet6 fd01::216:3eff:fe67:d14e/64 scope global mngtmpaddr dynamic
       valid_lft 272sec preferred_lft 272sec
    inet6 fe80::216:3eff:fe67:d14e/64 scope link
       valid_lft forever preferred_lft forever
```
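The `ip a show` output above lists the container's addresses but not its routes, so it does not tell whether con1 has an IPv4 default gateway; without one, only the directly attached 192.168.0.0/24 subnet is reachable. A minimal sketch of a check, using a hypothetical helper `default_gw` that reads `ip route show` output on stdin and prints the gateway of the default route, or nothing if there is none.

```shell
#!/bin/sh
# Hypothetical helper: read `ip route show` output on stdin and print the
# gateway address of the first "default via <gw> ..." route, if any.
# Prints nothing when no default route with a gateway is present.
default_gw() {
  awk '$1 == "default" && $2 == "via" { print $3; exit }'
}
```

Inside the container, `ip route show | default_gw` should print the LAN router's address; empty output means the container has no IPv4 default route.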

---------------------------------------------

```
root@con1:/# ping google.com
ping: unknown host google.com
```
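`ping: unknown host` only says that the name google.com could not be resolved; on its own it does not show whether IP routing to the internet works. A minimal sketch for separating the two, using a hypothetical helper `nameservers` that lists the `nameserver` entries in resolv.conf-style text read on stdin. The address 8.8.8.8 in the usage note is used only as a well-known public IP, not as a recommended resolver.

```shell
#!/bin/sh
# Hypothetical helper: print every "nameserver <addr>" entry found in
# resolv.conf-style text on stdin, one address per line. Comment lines
# and other directives (search, options) are ignored.
nameservers() {
  awk '$1 == "nameserver" { print $2 }'
}
```

Inside the container, run `nameservers < /etc/resolv.conf` (no output means no DNS server is configured), then `ping -c 3 8.8.8.8` and `getent hosts google.com`. If the IP ping succeeds but the lookup fails, the problem is DNS; if the IP ping fails too, it is routing.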

---------------------------------------------

```
root@con1:/# ping 192.168.0.161
PING 192.168.0.161 (192.168.0.161) 56(84) bytes of data.
64 bytes from 192.168.0.161: icmp_seq=1 ttl=64 time=0.115 ms
64 bytes from 192.168.0.161: icmp_seq=2 ttl=64 time=0.133 ms
64 bytes from 192.168.0.161: icmp_seq=3 ttl=64 time=0.189 ms
64 bytes from 192.168.0.161: icmp_seq=4 ttl=64 time=0.127 ms
^C
--- 192.168.0.161 ping statistics ---
4 packets transmitted, 4 received, 0% packet loss, time 3069ms
rtt min/avg/max/mdev = 0.115/0.141/0.189/0.028 ms
```

---------------------------------------------